
Team TechTree

09:26 08th Jan, 2020

Facebook Says Satyamev Jayate; Cracks Down on Deep Fakes | TechTree.com

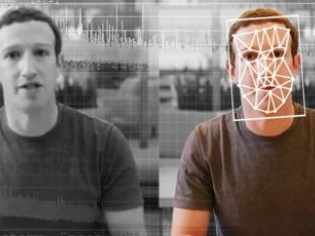

After months of dilly-dallying, Facebook has decided that it wants to be on the side of truth in the digital world, though the new policy is far from perfect.

Remember the time when Twitter CEO Jack Dorsey came out in support of oversight on campaign ads and Facebook was left sulking? Well, it now looks as though Mark Zuckerberg and his team have found their true calling, one that puts them on the side of truth, at least where misleading deep fakes are concerned.

In a policy statement announced earlier this morning, Facebook says that it will go after misleading deep fakes and heavily manipulated or synthesized media, such as AI-generated photo-realistic human faces that look like real people but are far removed from reality.

The policy statement, presented by Facebook’s vice-president of global policy management Monika Bickert, says that the company will be strict on manipulated media that has been edited or synthesized “in ways that aren’t apparent to an average person and would likely mislead someone into thinking that a subject of the video said words that they did not actually say.”

Going forward, the company will also remove manipulated media if “it is the product of artificial intelligence or machine learning that merges, replaces or superimposes content onto a video, making it appear to be authentic,” she writes, while clarifying that the policy “does not extend to content that is a parody or satire, or video that has been edited solely to omit or change the order of words.”

That last exemption is what concerns cybercrime experts, because editing a video to omit or re-order words amounts to disingenuous doctoring. During the recent elections in the UK, the campaign team of one contestant edited a video of a rival so that the rival appeared to be stumbling for words while answering a question on Brexit. This is exactly the kind of manipulation that Facebook’s policy doesn’t cover.

Imagine a similar situation in India. The ruling party could re-order words to make opposition leaders sound confused on key issues such as the CAA-NPR, or the Prime Minister’s words could be twisted out of context to make them sound like something they aren’t. In other words, the truth spinners can retain their jobs.

This brings us to the question of what the latest policy announcement actually means for users and for Facebook. Before we get into that, the blog post made one more announcement: a tie-up with wire agency Reuters to help newsrooms across the world identify deep fakes and manipulated media through an online training course.

Coming to the actual changes: since the policy does not touch parody or satire, even when it is created through malicious editing of videos, the only area it could really affect is deep fake pornography, which involves superimposing a real person’s face on an adult performer’s body. But since Facebook already bans nudity on its platform, even that makes little difference.

As for users, the policy does not really matter, because people rarely give their full attention to what they share on the platform. Garbage in, garbage out is the mantra, which means that unless Facebook controls things centrally by removing offensive content, there is no way users are going to benefit.

On the policy itself, one wonders what Mark Zuckerberg would say about the video of him that circulated on the internet some months ago, in which he appears to be going berserk. Would it pass as satire and stay up, or would Monika Bickert step in and have it removed because it is a fake?

TAGS: Facebook, DeepFake, AI, ML, Monika Bickert
